Jacob Devlin et al. @ Google AI Language

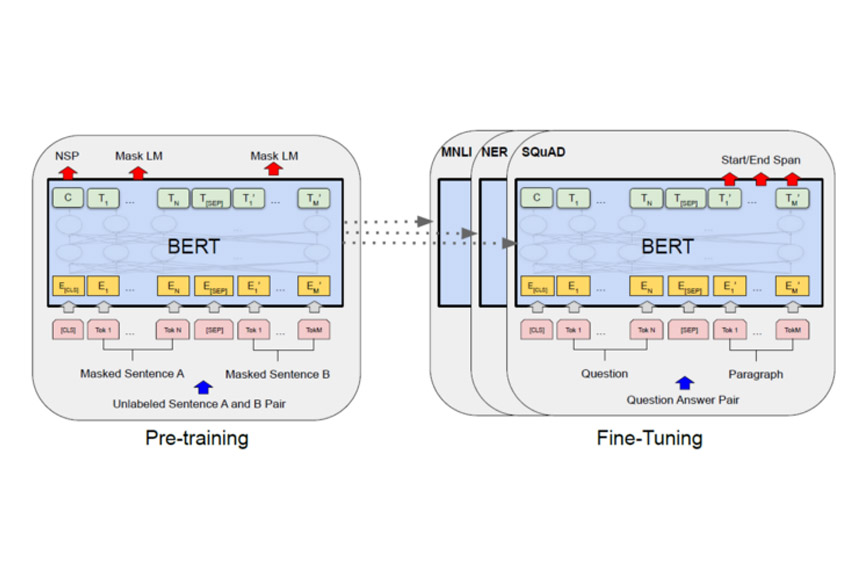

Overview:

Impact of dummy-token insertion on the robustness of BERT pre-training

Objective:

BERT pre-training is based on predicting masked tokens from their surrounding context. This work assesses the impact of inserting ‘dummy tokens’ on the robustness of BERT pre-training.
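To make the objective concrete, here is a minimal Python sketch of the two operations involved: inserting dummy tokens into a sequence, then masking tokens for prediction. The notes do not specify the insertion scheme, so the `[DUMMY]` token string, the `rate` parameter, the choice to insert before a token, and the choice to exclude dummy tokens from masking are all illustrative assumptions. The masking is also simplified: BERT selects about 15% of tokens and replaces most, but not all, of them with `[MASK]`, whereas this sketch always substitutes `[MASK]`.

```python
import random

MASK = "[MASK]"
DUMMY = "[DUMMY]"  # hypothetical placeholder; the notes do not name the dummy token


def insert_dummy_tokens(tokens, rate=0.1, seed=0):
    """Insert DUMMY before each token with probability `rate` (assumed scheme)."""
    rng = random.Random(seed)
    out = []
    for tok in tokens:
        if rng.random() < rate:
            out.append(DUMMY)
        out.append(tok)
    return out


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace non-dummy tokens with MASK with probability `mask_prob`.

    Returns the masked sequence and a parallel list of labels: the original
    token at masked positions, None elsewhere. Skipping dummy tokens is an
    assumption of this sketch, not something stated in the notes.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if tok != DUMMY and rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    noisy = insert_dummy_tokens(sentence, rate=0.3, seed=1)
    masked, labels = mask_tokens(noisy, mask_prob=0.15, seed=2)
    print(noisy)
    print(masked)
    print(labels)
```

Under these assumptions, robustness could be probed by comparing masked-token prediction quality on the clean sequence against the sequence with dummy tokens inserted, at several values of `rate`.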

Environment: