AI-Based Object Detection and Text Extraction Technologies Have Revolutionized the Document Processing Domain

The short answer to the posed question in the title is “a lot”.

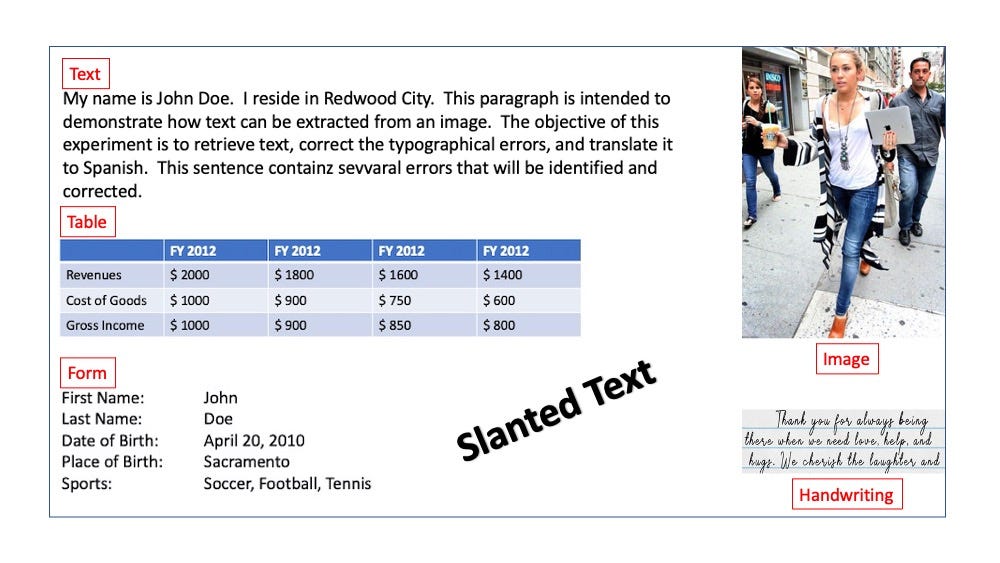

To set the stage for this article, I would like start with an example. Consider being tasked to analyze the scanned version of a company annual report (form 10-k). The document typically contains various types of fields such as pure text, form (name, position, and compensation of officers), tables (e.g. income statement, balance sheet), and even images. Just extracting the textual characters from the document is not nearly enough to conduct any meaningful analysis. As an example, it would be ideal to discern tables, and forms from normal paragraphs and transfer their content to a spreadsheet. Similarly, purely textual sections; such a “Management Discussion”, can be extracted and piped to a “Sentiment Analysis” engine to identify the tone of the discussion. Deep Learning-based image detection and language processing techniques can easily tackle this challenge.

Limitation of Optical Character Recognition Technologies

So why not use traditional Optical Character Recognition (OCR) to tackle this task?

OCR technology is not a new technology and has been successfully used for many decades. OCR has come a long way and can do a remarkable job of extracting text from a scanned document. Despite its maturity, OCR (in absence of post processing) is unable to discern between various types of fields in a heterogeneous document. As an example, OCR treats a table as a series of words and is unable to extract table’s structural information. The same holds true with forms. It fails to capture the relationship between the title and content of a given field. Furthermore, detecting an object in an embedded image is out of the question.

This is where Deep Learning-based object detection and language processing technology comes to rescue.

Framing the Task at Hand

This project entailed building a prototype of a Machine Learning pipeline able to ingest and process a stream of complex heterogeneous documents to extract and comprehend their constituent fields. The term “heterogeneous” is used here to indicate that a typical document contains, pure text, tables, forms, images, and handwritten notes. The specific use case for this project is for processing client application forms for a service-oriented company. The test document (see Figure 1) contains one textual paragraph, one form, one table, one image, one field containing handwriting, and a slanted textual field. The objective is as follows:

- Extract the contents of the form and the table and transform it to a spreadsheet (.csv file)

- Extract the textual paragraph located in the upper left corner of the document, correct the typographic errors, and translate the outcome to Spanish

- Recover the slanted text field

- Recover the handwritten field and convert to text

- Analyze an image to detect faces, objects, personalities (celebrities), signs, among many other categories

The sponsor of this effort had several goals in mind but elimination manual data entry errors was front and center.

Tools Used

We are fortunate since there is no shortage of Deep Learning libraries to tackle the main tasks of this project. Aside from open-source libraries, Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft (Azure) all offer well-tuned libraries for text, language, and image processing. Admittedly my experience has been mostly positive with all of these resources, each having certain strengths and weaknesses. The main tools used in this experiment were AWS Textract, AWS Rekognition, AWS Comprehendin addition to several open source libraries.

Results

Figure 2 depicts the result of form and table extraction, recovery of the slanted text, and finally the conversion of the handwritten note to text. I must say the extraction of tables and forms produced remarkable results regardless of how hard I tried to break it. This implementation, however, did have difficulty extracting the slanted text, especially when the tilt angle exceeded 30 degrees. This difficulty can be overcome by synthetically generating multiple rotated versions of the given field and selecting the one with highest confidence score.

The biggest challenge was making sense of the handwritten note. Multiple libraries were used but the results were less than what I would like to. Much work remains in this area.

Figure 3 depicts the results of text extraction, correction, and translation. The produced results were truly superb. As for the object detection however, there were couple of notable errors namely not detecting the third pair of “jeans” and the “iPad”. In production systems, the models can be fine-tuned to address domain specific objects and that invariably will yield better results.

It Is Not All Good

If you think that you can gain awesome results by simply feeding a PDF file to a model and make a few API calls, you are terribly wrong. I am always reminded of Chip Huyen’srecent tweet saying “Machine Learning Engineering” is 10% Machine Learning and 90% Engineering”. I wish she could share her secret on how she can get away with spending only 90% of the time on the engineering part. Admittedly using libraries such as AWS Comprehend and Textract can ease the burden, but still one cannot get away with a sizable amount of prep and cleanup work.

Can We Do Better?

The answer is a resounding “yes”. This undertaking has been merely a “proof of concept”. No attempt was made to optimize the process. I am confident a x5 improvement in latency and throughput can be achieved by employing one or more of the following techniques:

- Splitting the documents and using batch processing to process like fields of multiple documents

- Migrate to a distributed processing scenario instead of a round robin method

- Services such as AWS Comprehend and Textract offer both synchronous and asynchronous calls, where the latter will demonstrate far better outcomes. In synchronous mode (used in this experiment) the next library call can’t be made before the conclusion of the existing one